Run AI text & image generation locally

My notes on how I got AI models working on my local Debian desktop, which has an NVIDIA RTX 2060 with 8GB of VRAM.

Prerequisites

- NVIDIA proprietary Linux drivers: Debian 12 instructions

- Docker: apt instructions

  - For ease of use, I recommend not requiring sudo for docker commands. (instructions)

- NVIDIA Container Toolkit
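Before moving on, a quick sanity check of the prerequisites above can save some debugging. This is a minimal sketch, not an official procedure: `check_cmd` is a helper name I made up, and I'm assuming that `nvidia-smi` comes with the driver, `nvidia-ctk` with the NVIDIA Container Toolkit, and that a successful `docker ps` without sudo means the non-root setup worked.

```shell
#!/bin/sh
# Hypothetical helper: report whether a command is available on PATH.
check_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "ok: $1"
    else
        echo "missing: $1"
    fi
}

# Assumption: nvidia-smi ships with the driver, nvidia-ctk with the
# NVIDIA Container Toolkit.
for cmd in nvidia-smi docker nvidia-ctk; do
    check_cmd "$cmd"
done

# Assumption: if "docker ps" succeeds here, the current user can talk to
# the Docker daemon without sudo.
if command -v docker >/dev/null 2>&1; then
    if docker ps >/dev/null 2>&1; then
        echo "ok: docker works without sudo"
    else
        echo "warning: 'docker ps' failed (daemon down, or sudo still required)"
    fi
fi
```

Anything reported as missing points back to the matching item in the list above.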

Verify the NVIDIA GPU works in Docker

docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi

Example output

Thu May 8 16:57:51 2025

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.216.01 Driver Version: 535.216.01 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA GeForce RTX 2060 ... On | 00000000:08:00.0 On | N/A |

| 0% 49C P8 11W / 175W | 518MiB / 8192MiB | 1% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

+---------------------------------------------------------------------------------------+

Text Generation (chat with an LLM)

I settled on ollama, which provides a simple terminal interface.

(Linux install instructions)
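Whether a model fits depends largely on how much VRAM is free, so it can help to check that before pulling one. The sketch below is only illustrative: `free_vram_mib` is a name I made up, and the sample numbers are the `518MiB / 8192MiB` from the nvidia-smi output earlier. It relies on nvidia-smi's `--query-gpu` CSV output, which prints `used, total` per GPU.

```shell
#!/bin/sh
# Hypothetical helper: print free VRAM in MiB for a "used, total" line, as
# produced by:
#   nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits
free_vram_mib() {
    echo "$1" | awk -F', *' '{ print $2 - $1 }'
}

# Sample values copied from the nvidia-smi output earlier (518MiB / 8192MiB).
free_vram_mib "518, 8192"   # prints 7674

# On a machine with the driver installed, query the real numbers per GPU.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits |
        while IFS= read -r line; do
            echo "free VRAM: $(free_vram_mib "$line") MiB"
        done
fi
```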

Llama 3.2 worked well on my 8GB card:

ollama run llama3.2

Example output

>>> Hello! What model are you?

Hello! I'm an artificial intelligence model known as Llama. Llama stands for "Large Language Model Meta AI." It's a type of large

language model that is trained on a massive dataset of text to generate human-like responses to a wide range of questions and prompts.

I'm constantly learning and improving my abilities to provide more accurate and helpful information to users like you!

Generate images

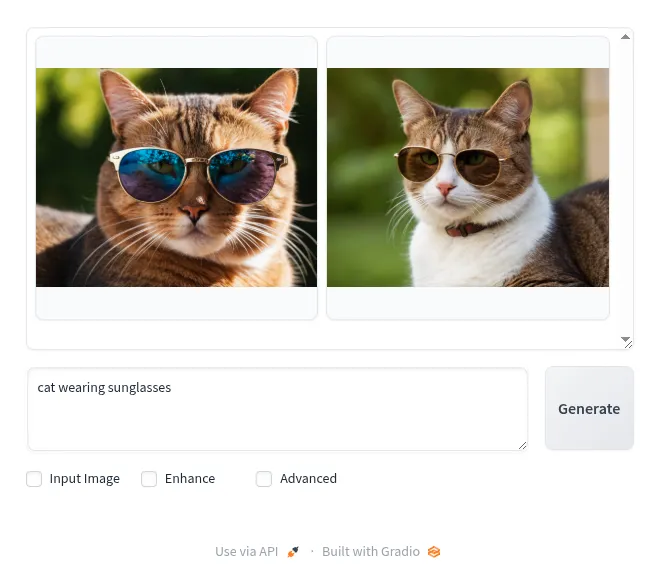

I chose Fooocus, which offers a nice, simple web UI, and ran it in Docker. (Docker instructions)

I recommend tweaking one part of the (large) docker command: instead of a Docker volume, use a “bind mount” so that the data is stored in a local directory. (Docker volume documentation.) However, assuming you’re running Docker Engine as root (the default), you need to create the directory manually first; otherwise Docker creates it as root and the container user can’t access it:

mkdir fooocus-data

Then run the container:

docker run -p 7865:7865 -v ./fooocus-data:/content/data -it \
    --gpus all \
    -e CMDARGS=--listen \
    -e DATADIR=/content/data \
    -e config_path=/content/data/config.txt \
    -e config_example_path=/content/data/config_modification_tutorial.txt \
    -e path_checkpoints=/content/data/models/checkpoints/ \
    -e path_loras=/content/data/models/loras/ \
    -e path_embeddings=/content/data/models/embeddings/ \
    -e path_vae_approx=/content/data/models/vae_approx/ \
    -e path_upscale_models=/content/data/models/upscale_models/ \
    -e path_inpaint=/content/data/models/inpaint/ \
    -e path_controlnet=/content/data/models/controlnet/ \
    -e path_clip_vision=/content/data/models/clip_vision/ \
    -e path_fooocus_expansion=/content/data/models/prompt_expansion/fooocus_expansion/ \
    -e path_outputs=/content/app/outputs/ \
    ghcr.io/lllyasviel/fooocus

Once it downloads a bunch of data / models, it spits out a local URL to access the web UI:

App started successful. Use the app with http://localhost:7865/ or 0.0.0.0:7865

Et voilà! Image generation takes me roughly 30 seconds, but you can tweak the settings to be faster or higher quality.
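If the container complains about permissions or the page doesn't load, two quick checks cover the bind-mount caveat and the published port. This is a rough sketch under my own assumptions: `check_owner` is a made-up helper, `fooocus-data` and port 7865 are taken from the docker command above, and a directory not owned by your user only hints that Docker created it as root.

```shell
#!/bin/sh
# Directory name taken from the docker command above.
data_dir="fooocus-data"

# Create the bind-mount directory as the current user if it is missing.
mkdir -p "$data_dir"

# Hypothetical helper: report whether the current user owns the directory.
# A directory owned by someone else (e.g. root) suggests Docker created it.
check_owner() {
    if [ -O "$1" ]; then
        echo "ok: $1 is owned by $(id -un)"
    else
        echo "warning: $1 is not owned by $(id -un)"
    fi
}
check_owner "$data_dir"

# Optionally confirm the web UI answers on the published port (7865 above).
if command -v curl >/dev/null 2>&1; then
    if curl -fsS -o /dev/null --max-time 5 http://localhost:7865/; then
        echo "ok: web UI reachable"
    else
        echo "web UI not reachable (yet)"
    fi
fi
```

The first start can take a while because of the model downloads, so "not reachable (yet)" right after launch is not necessarily a problem.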